
Azure MLOps Within the Aridhia DRE

Aridhia has seen increasing demand for MLOps within our Digital Research Environment (DRE). MLOps is essential for organisations that want to operationalise AI effectively and continuously improve their machine learning workflows.

What is MLOps?

MLOps, short for Machine Learning Operations, is a set of practices, tools, and methodologies designed to streamline and standardise the deployment, monitoring, and management of machine learning models in production. It bridges the gap between data science and IT operations, enabling teams to collaborate effectively and ensure the reliability, scalability, and maintainability of machine learning systems.

Applications of MLOps are broad; however, within the DRE some use cases are more common than others:

Medical Imaging

MLOps can be used to develop and deploy ML models that analyse medical images (e.g., X-rays, MRIs) to detect abnormalities, such as tumours or fractures, with high accuracy. This aids radiologists in making faster and more accurate diagnoses.

Drug Discovery and Development

MLOps can accelerate the drug discovery process by analysing vast amounts of biomedical data to identify potential drug candidates. It also helps optimise clinical trials by predicting patient responses to new treatments. A detailed paper on this area, co-authored by our Chief Science Officer, Jeff Barret, is available here.

As an Azure-hosted environment, the Aridhia DRE provides out-of-the-box capabilities and integration points for getting started with MLOps. Each workspace can deploy one or more Data Science Virtual Machines (DSVMs). A DSVM includes tools such as JupyterLab, VS Code, and PyCharm, which streamline the development and deployment of machine learning models. Each DSVM also comes with the MLflow SDK pre-installed and access to the Azure Machine Learning portal.

MLOps with a GPU VM

The most flexible option for MLOps within the DRE is to use a DSVM directly, with extended DRE configuration. This keeps the scope and control of all ML activity, and all data, entirely within the confines of the DRE. It is also the more straightforward approach, making use of the flexible choice of VM configurations available to Aridhia DRE workspaces.

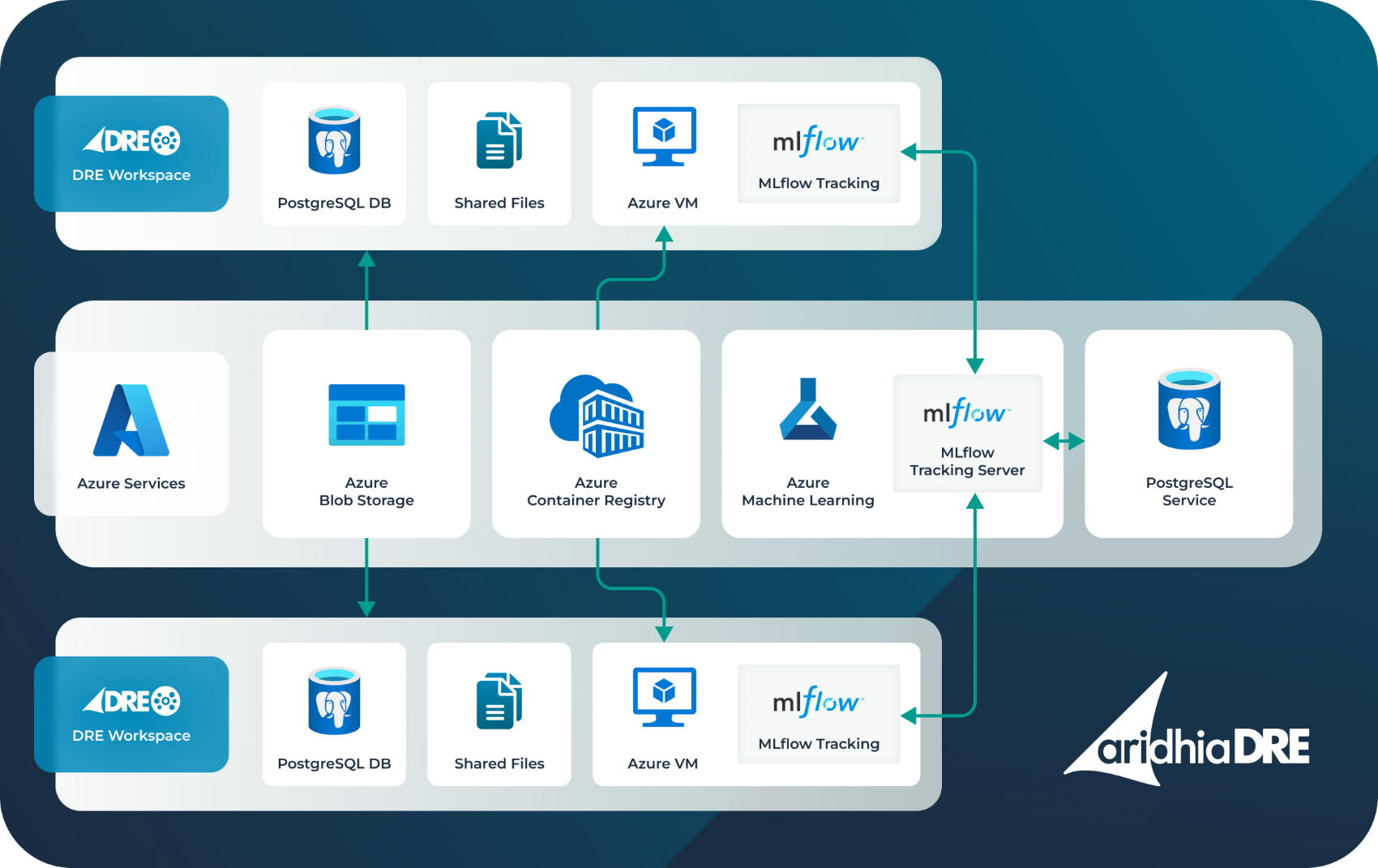

For large compute capacity (often required for training large models), GPU-backed DSVMs are available from a workspace, with full access to shared file systems, Gitea, and Azure Blob Storage for large volumes of unstructured data such as medical images. Each workspace includes MLflow Tracking, which connects to a centralised, Aridhia DRE-hosted MLflow Tracking server via the client SDK libraries.
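The MLflow client SDK records runs by calling the tracking server's REST API. To show what actually gets recorded, the sketch below builds a request for MLflow's `/api/2.0/mlflow/runs/log-batch` endpoint using only the Python standard library. It is an illustration under stated assumptions, not the DRE's configuration: the tracking-server URL and run ID are hypothetical placeholders, and authentication is left out because it depends on the deployment.

```python
import json
import time
import urllib.request

# Hypothetical tracking-server address; the real URL comes from the DRE configuration.
TRACKING_URI = "https://mlflow.example-dre.invalid"


def build_log_batch_payload(run_id, params, metrics, step=0, timestamp_ms=None):
    """Build a request body for MLflow's REST endpoint /api/2.0/mlflow/runs/log-batch."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # MLflow timestamps are in milliseconds
    return {
        "run_id": run_id,
        # MLflow stores parameter values as strings
        "params": [{"key": k, "value": str(v)} for k, v in params.items()],
        # Each metric carries its own timestamp and training step
        "metrics": [
            {"key": k, "value": float(v), "timestamp": timestamp_ms, "step": step}
            for k, v in metrics.items()
        ],
        "tags": [],
    }


def make_log_batch_request(tracking_uri, payload):
    """Prepare (but do not send) a JSON POST request to the tracking server."""
    url = tracking_uri.rstrip("/") + "/api/2.0/mlflow/runs/log-batch"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )


if __name__ == "__main__":
    payload = build_log_batch_payload(
        "hypothetical-run-id",
        {"learning_rate": 0.001, "epochs": 20},
        {"val_auc": 0.91},
        step=20,
    )
    request = make_log_batch_request(TRACKING_URI, payload)
    print(request.full_url)
    # urllib.request.urlopen(request) would send it; add whatever auth the server requires.
```

On a DSVM, where the MLflow SDK is already installed, the same information is normally logged with `mlflow.set_tracking_uri(...)` followed by `mlflow.log_params(...)` and `mlflow.log_metrics(...)` inside a `with mlflow.start_run():` block; the SDK handles run creation and request formatting.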

A dedicated resource group within the DRE Hub hosts the DRE Hub Azure Container Registry (ACR), which stores the completed containerised models. Azure Blob Storage holds the data used to run the models. Workspaces can share the same Blob Storage to enable data sharing, or mount separate Blob Storage instances for isolated data access. Additionally, an Azure Database is used to manage MLflow admin logs.
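When a Blob Storage container is mounted into a workspace as a local directory (for example with BlobFuse), a model can read its input data with ordinary file operations. The sketch below assumes such a mount and builds a CSV manifest of image files that a scoring or training job could consume. The mount path and the set of file extensions are hypothetical choices for illustration; pointing `mount_root` at a shared container or at a workspace's own container is what distinguishes shared from isolated data access.

```python
import csv
from pathlib import Path

# Hypothetical mount point for a Blob Storage container inside the workspace.
DEFAULT_MOUNT = Path("/mnt/blob/imaging")
# Illustrative image types; adjust to the data actually stored in the container.
IMAGE_SUFFIXES = {".dcm", ".png", ".jpg", ".jpeg"}


def build_manifest(mount_root, suffixes=IMAGE_SUFFIXES):
    """Return (relative_path, size_bytes) for every image file under mount_root, sorted by path."""
    root = Path(mount_root)
    rows = []
    for path in root.rglob("*"):
        # Match extensions case-insensitively (e.g. ".DCM" and ".dcm")
        if path.is_file() and path.suffix.lower() in suffixes:
            rows.append((path.relative_to(root).as_posix(), path.stat().st_size))
    return sorted(rows)


def write_manifest(rows, out_path):
    """Write the manifest as CSV so a downstream job can read the file list."""
    with open(out_path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["path", "size_bytes"])
        writer.writerows(rows)


if __name__ == "__main__":
    if DEFAULT_MOUNT.is_dir():
        write_manifest(build_manifest(DEFAULT_MOUNT), "manifest.csv")
```

Storing relative paths keeps the manifest valid even if the container is mounted at a different location on another VM.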

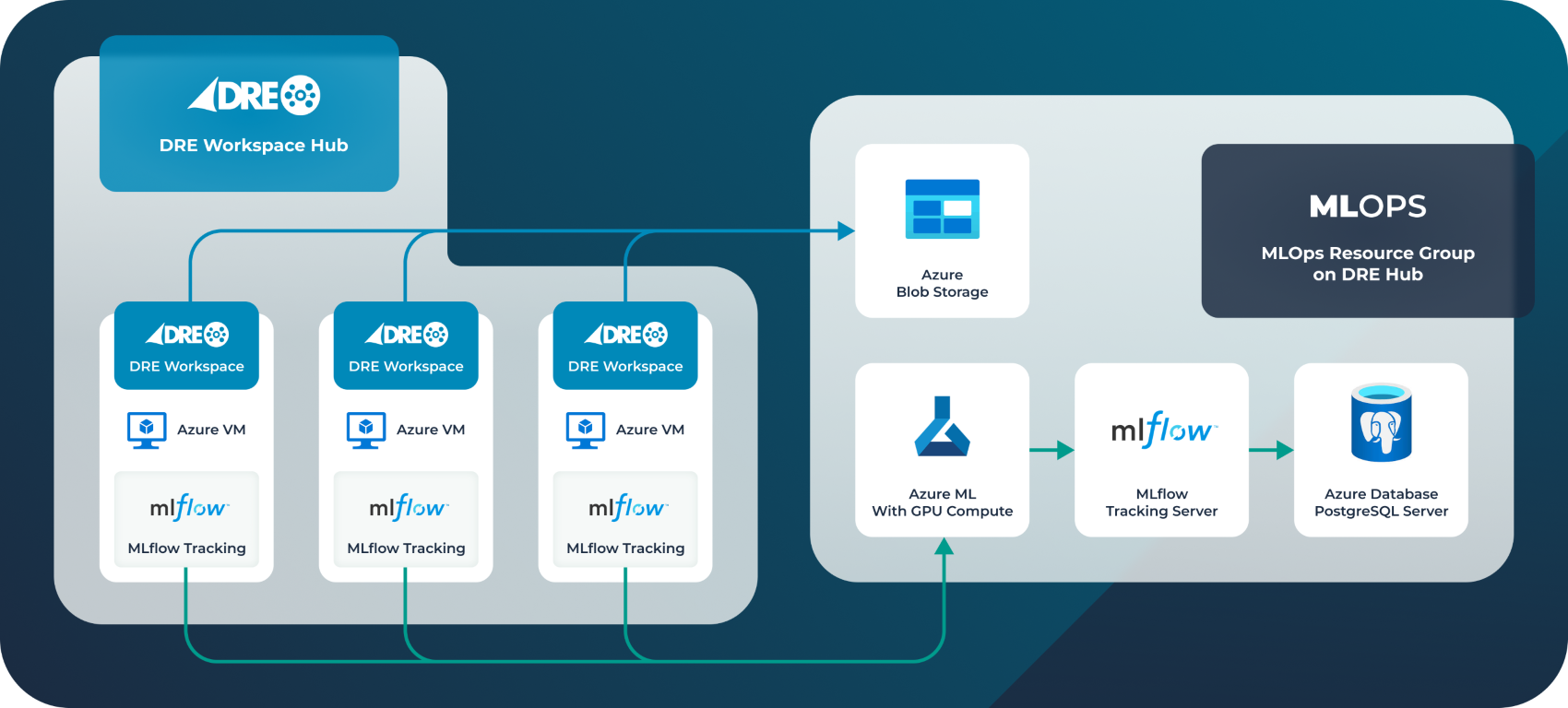

Using Azure ML Compute

Alternatively, the DRE can make use of Azure ML compute entirely, so that workspace VMs can be smaller and used for control purposes only. In this configuration, a dedicated resource group within the DRE Hub provides access to Azure ML compute, and Azure ML also hosts the container registry for the completed models. As before, Azure Blob Storage holds the data used to run the models, workspaces can either share the same Blob Storage or mount separate instances for isolated data access, and an Azure Database manages MLflow admin logs.
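In this configuration, the workspace VM mainly describes and submits jobs, while training runs on an Azure ML compute cluster. The sketch below builds a Python dict with the same fields as an Azure ML CLI (v2) command job YAML file. The cluster name, environment, datastore path, experiment name, and `train.py` script are hypothetical placeholders, not part of the DRE setup.

```python
import json


def azureml_ref(name):
    """Azure ML CLI v2 refers to registered assets and compute as 'azureml:<name>'."""
    return name if name.startswith("azureml:") else f"azureml:{name}"


def build_command_job(compute, environment, data_path, experiment_name, code_dir="./src"):
    """Return a dict mirroring an Azure ML CLI (v2) command job specification."""
    return {
        "$schema": "https://azuremlschemas.azureedge.net/latest/commandJob.schema.json",
        "experiment_name": experiment_name,
        "code": code_dir,  # local folder uploaded with the job
        # ${{inputs.images}} is substituted by Azure ML with the input's path on the compute
        "command": "python train.py --data ${{inputs.images}}",
        "inputs": {"images": {"type": "uri_folder", "path": data_path}},
        "environment": azureml_ref(environment),
        "compute": azureml_ref(compute),  # the cluster does the heavy lifting
    }


if __name__ == "__main__":
    job = build_command_job(
        compute="gpu-cluster",                                  # hypothetical cluster name
        environment="training-env:1",                           # hypothetical registered environment
        data_path="azureml://datastores/imaging/paths/train/",  # hypothetical datastore path
        experiment_name="tumour-detection",                     # hypothetical experiment
    )
    print(json.dumps(job, indent=2))
```

Written out as `job.yml` with a YAML library, a specification like this would be submitted with `az ml job create --file job.yml` (plus the resource group and workspace options), so the control VM never needs a GPU of its own.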

From an Aridhia DRE workspace, machine learning can be implemented in several ways, with clear choices about where compute and control reside, providing maximum flexibility and support for existing tools and processes. Our customer enablement and service desk teams are available to help configure and set up the environment, so that users can concentrate on their research and its outcomes.

March 31, 2025

Alicia Gibson

Alicia Gibson joined Aridhia in 2018 and serves as Chief Project Officer. With over 25 years of experience, Alicia is a seasoned leader in project and operational management. Drawing on a wealth of experience in both internal and customer-facing projects, she excels at aligning projects with the company’s vision. Alicia also leads the Customer Data Engineering team, ensuring alignment with customers’ data engineering and data science needs and expectations. Prior to joining Aridhia, Alicia worked at multiple Fortune 500 companies, mainly in the technology sector, and also joined an early-stage software startup. Alicia is a certified Project Manager and holds an MBA.